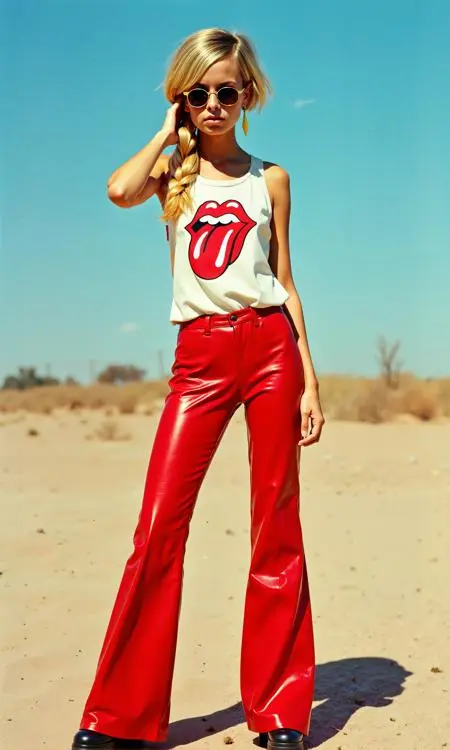

Twiggy [2.3MB Single-Transformer Flux LoRA]

Photography, Realistic, Girl

v1.0

The LoRA was trained on just two single transformer blocks at rank 32, which is what keeps the file so small (2.3MB) without any loss of quality.
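To see why restricting training to two blocks keeps the file so small, here is a rough back-of-the-envelope sketch. A LoRA pair for a linear layer with `d_in` inputs and `d_out` outputs stores `rank * (d_in + d_out)` parameters. The layer shapes below (three 3072 -> 3072 projections per block) and the 2-byte storage per parameter are assumptions for illustration only; the description does not say which layers inside the two blocks were actually trained.

```python
def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in one LoRA pair: A is (rank x d_in), B is (d_out x rank)."""
    return rank * (d_in + d_out)


def lora_size_bytes(layer_shapes, rank: int, n_blocks: int, bytes_per_param: int = 2) -> int:
    """Approximate weight size for a LoRA covering the same layers in n_blocks blocks."""
    per_block = sum(lora_param_count(d_in, d_out, rank) for d_in, d_out in layer_shapes)
    return per_block * n_blocks * bytes_per_param


# ASSUMPTION: three 3072 -> 3072 projections adapted per block (hypothetical shapes).
ASSUMED_LAYERS = [(3072, 3072)] * 3

# Rank 32 and two blocks come from the description; 2 bytes/param assumes 16-bit weights.
size = lora_size_bytes(ASSUMED_LAYERS, rank=32, n_blocks=2)
print(f"{size / 1e6:.2f} MB")
```

Under these assumed shapes the estimate lands at a couple of megabytes, ignoring file metadata. Adapting more layers per block, or more blocks, scales the size linearly.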

Since the LoRA is applied to only two blocks, it is less prone to bleeding effects. Many thanks to 42Lux for their support.
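If you want to confirm which blocks a LoRA file touches, one option is to scan its weight names for block indices. This is a minimal sketch that assumes the keys follow a `single_transformer_blocks.<index>.` naming pattern; the example keys and the block indices 7 and 20 are made up for illustration and are not taken from this file.

```python
import re

# ASSUMPTION: block index appears in keys as "single_transformer_blocks.<n>."
_BLOCK_RE = re.compile(r"single_transformer_blocks\.(\d+)\.")


def single_blocks_touched(keys):
    """Return the sorted indices of single transformer blocks named in the keys."""
    found = set()
    for key in keys:
        match = _BLOCK_RE.search(key)
        if match:
            found.add(int(match.group(1)))
    return sorted(found)


# Hypothetical key names, for illustration only.
example_keys = [
    "transformer.single_transformer_blocks.7.attn.to_q.lora_A.weight",
    "transformer.single_transformer_blocks.7.attn.to_q.lora_B.weight",
    "transformer.single_transformer_blocks.20.attn.to_k.lora_A.weight",
    "transformer.single_transformer_blocks.20.attn.to_k.lora_B.weight",
]

print(single_blocks_touched(example_keys))
```

In practice you would pass the keys of the loaded state dict instead of `example_keys`. A LoRA confined to two blocks should report exactly two indices.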
