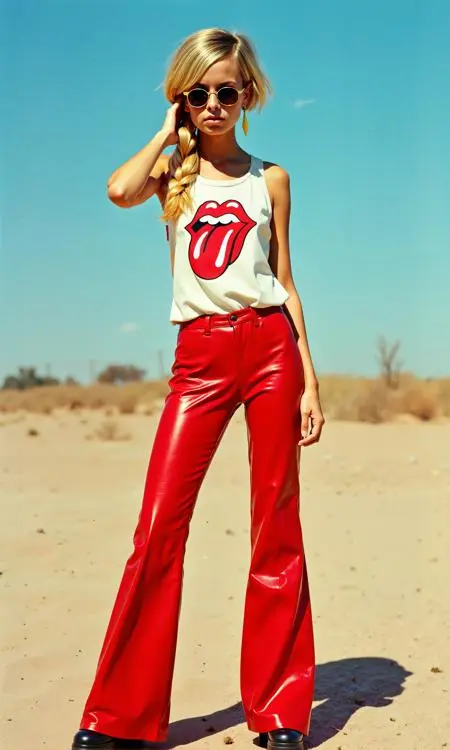

Twiggy [2.3MB Single Transformer Flux LoRA]

Photography, Realistic, Girl

v1.0

The LoRA was trained on only two single transformer blocks at rank 32, which keeps the file down to 2.3MB without any loss of quality.
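The following rough size estimate in Python shows why two blocks at rank 32 produce such a small file. It is a sketch under stated assumptions. The layer shapes come from the reference Flux single-stream block (hidden size 3072, MLP hidden size 12288). The card does not say which layers were trained, so the adapted layers (`linear1`, `linear2`) are an assumption, as is the 2-byte bf16/fp16 storage. `SINGLE_BLOCK_LINEARS`, `lora_params` and `lora_size_bytes` are hypothetical helpers, not part of any library.

```python
"""Back-of-the-envelope LoRA file-size estimate (a sketch, not the author's tooling)."""
from typing import Iterable, Tuple

# ASSUMPTION: shapes of the reference Flux single-stream block
# (hidden size 3072, MLP hidden size 4 * 3072). Which layers this LoRA
# actually targets is not stated on the card; the dict below is hypothetical.
HIDDEN = 3072
MLP_HIDDEN = 4 * HIDDEN  # 12288

# (in_features, out_features) for the two large linears in a single-stream block
SINGLE_BLOCK_LINEARS = {
    "linear1": (HIDDEN, 3 * HIDDEN + MLP_HIDDEN),  # fused QKV + MLP input projection
    "linear2": (HIDDEN + MLP_HIDDEN, HIDDEN),      # fused attention-out + MLP output projection
}


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """A LoRA pair A (rank x d_in) and B (d_out x rank) adds rank * (d_in + d_out) weights."""
    return rank * (d_in + d_out)


def lora_size_bytes(
    layers: Iterable[Tuple[int, int]], rank: int, num_blocks: int, bytes_per_param: int = 2
) -> int:
    """Adapter size for `layers` repeated over `num_blocks` blocks (bf16/fp16 = 2 bytes/param)."""
    per_block = sum(lora_params(d_in, d_out, rank) for d_in, d_out in layers)
    return per_block * num_blocks * bytes_per_param


if __name__ == "__main__":
    rank, blocks = 32, 2
    for name, shape in SINGLE_BLOCK_LINEARS.items():
        size = lora_size_bytes([shape], rank, blocks)
        print(f"{name} only: {size / 2**20:.2f} MiB")
    both = lora_size_bytes(SINGLE_BLOCK_LINEARS.values(), rank, blocks)
    print(f"linear1 + linear2: {both / 2**20:.2f} MiB")
```

Run as a script, this prints 3.00 MiB for `linear1` only, 2.25 MiB for `linear2` only and 5.25 MiB for both, ignoring the small safetensors header. Only the order of magnitude matters here. A LoRA adds just `rank * (d_in + d_out)` weights per adapted layer, so restricting training to two blocks keeps the file in the low-megabyte range, while adapting every block multiplies the size accordingly.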

Because the LoRA is applied to only two blocks, it is less prone to bleeding effects. Many thanks to 42Lux for their support.
